TL;DR – USB-C with PPS (Programmable Power Supply) technology is here, it’s cool, and now it’s usable on more than just the newest smartphones – it works on almost any Li-ion battery with the right USB-C tester. Check out the GitHub repo – it’s open-source!

As seen on Hackaday!

DISCLAIMER: Lithium-ion batteries can be dangerous if mishandled or abused! While much testing and development has resulted in a fairly stable implementation, this application is still an “off-label” use of a USB PD charger and USB-C tester, and therefore there is a risk of property damage, personal injury, or other unforeseen consequences if something goes wrong (and I will NOT be held responsible for that!).

Introduction

The USB PD (Power Delivery) standard has revolutionized how we charge our everyday electronic devices. Many new devices (such as smartphones) emphasize “fast charge” capability, but supporting it has required changes to both the devices themselves and the adapters that power them.

Because power losses in the charging cable (or any other resistance) scale with the square of the current flowing through it, one can deliver more power for the same current, and therefore the same cable loss, by increasing the voltage (this is also why the AC power transmission lines that you see along streets and in the countryside run at high voltage). The USB PD standard allows a device to ask the adapter to raise its output voltage from 5 volts to a higher level, such as 9, 15, or 20 (and sometimes 12) volts.
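To put rough numbers on that, here is a small Python sketch of resistive cable loss (I²R) at a few USB PD voltages. The 18 W load and 0.2 ohm cable resistance are assumed values chosen purely for illustration, and the power is treated as measured at the adapter end of the cable.

```python
def cable_loss_watts(power_w: float, volts: float, cable_ohms: float) -> float:
    """Power dissipated in the cable when power_w leaves the adapter at the given voltage."""
    current_a = power_w / volts          # I = P / V
    return current_a ** 2 * cable_ohms   # P_loss = I^2 * R


# Assumed example values: 18 W from the adapter, 0.2 ohm round-trip cable resistance.
POWER_W = 18.0
CABLE_OHMS = 0.2

for volts in (5.0, 9.0, 20.0):
    loss = cable_loss_watts(POWER_W, volts, CABLE_OHMS)
    print(f"{volts:4.0f} V: {POWER_W / volts:.2f} A, {loss:.2f} W lost in the cable")
```

Quadrupling the voltage cuts the current to a quarter, so the cable loss falls to one sixteenth for the same delivered power.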

The third version of the USB PD standard added optional PPS (Programmable Power Supply) support, which allows an adapter to output a wide range of voltages rather than a fixed “menu” of 5, 9, 15, 20 (and sometimes 12) volts.

What is USB-C PPS charging?

PPS charging is a relatively new variation on the existing PD charging scheme. It allows the device being charged to actively communicate with the adapter and request a precise voltage (and current) level. This increases efficiency, since DC-DC conversion efficiency drops as the difference between the input (adapter) voltage and the output (battery) voltage grows. This bidirectional communication lets the adapter share some of the regulation workload and moves some of the heat-generating power conversion out of the device, so the device’s battery charges at a cooler temperature, which improves its lifespan.

Direct Battery Charging

One step past normal PPS charging is direct charging, which completely offloads power management to the adapter; the host device controls the charge process purely via software commands to the adapter. This is analogous to the DC fast charging used in electric vehicles (EVs), which also performs all power regulation outside of the vehicle and its internal charge circuitry. Because the device no longer needs to perform its own DC-DC conversion, a step in the power conversion “chain” is skipped, which increases efficiency and reduces the amount of heat dissipated in the host device (you can’t lose power on a conversion step if you never perform that step in the first place!). With this reduction in internally-generated heat, the battery can be charged at even faster rates than it could with previous charging schemes.

One barrier to the widespread adoption of direct charging is that it requires the device to explicitly support it, as it requires significant changes to how the “power path” is designed internally. It also requires the adapter to support the voltage and current levels required by the host.

Diagrams comparing normal versus direct charging schemes.

Thankfully, on the adapter side, PPS support is becoming more and more common, even though most devices on the market (at least at the time of writing) don’t support it. However, because that potential is already present, perhaps there’s a way for the electronics hobbyist to take advantage of direct charging for their own batteries…

Shizuku Platform and its Lua API

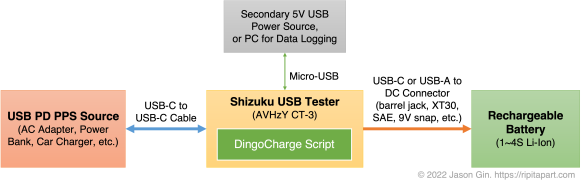

The Shizuku USB multimeter by YK-Lab, which is available in a few rebadged forms under names like YK-Lab YK001, AVHzY CT-3, Power-Z KT002, or ATORCH UT18, is a very useful USB charger testing device. It features USB-A and USB-C ports, can measure voltage, current, and power, and can calculate passed charge (mAh) and energy (mWh) in real time. Unlike most USB testers on the market, it is also user-programmable in Lua, a lightweight programming/scripting language.

The Lua API on the tester provides a large amount of extensibility beyond the original tester’s design, and in my case I’m using its ability to “trigger” non-standard voltages from fast-charge capable power adapters as well as its colour LCD screen to act as a highly flexible direct charger – an intermediary between a USB-C PD PPS adapter and an arbitrary (Li-ion) rechargeable battery.

Introducing: DingoCharge!

The idea behind making a battery charging script came about when I looked at using bench-top adjustable power supplies to charge batteries. Since the Shizuku tester (in my case, the AVHzY CT-3 variant) allows me to use the PPS protocol to finely adjust the adapter’s output voltage, I tried manually varying the voltage to increase or decrease the current flowing into a battery – and it worked!

That said, constantly checking the battery’s current and voltage levels just to charge a battery gets pretty tiring, and since the Shizuku is Lua-programmable… why not make a script that automates all of this for me? Thus began a programming journey that’s been ongoing for over a year, and I’ve still got ideas to add even more functionality (as long as I don’t crash the tester by running out of memory… don’t ask me how I know).

Charge Regulation Algorithm

All lithium-ion batteries (including less-common ones like lithium-iron phosphate (LiFePO4) and lithium titanate) use what’s known as CC-CV charging; this stands for constant-current/constant-voltage. A fixed amount of current is fed into the battery until its voltage reaches a certain threshold, then the voltage is held at a fixed value until the current going into the battery drops below another, lower, threshold. Once this is reached, the current is turned off entirely and the charge process is complete.

Although the PPS specification allows a device to set a maximum current level, my own testing revealed two problems. First, there was so much variation amongst my different adapters that I could not rely on the hardware to perform constant-current regulation with enough precision for my liking. Second, the voltage drop across the USB-C cable caused the battery’s charge current to taper off too early, because the voltage at the adapter reached its programmed constant-voltage level before the battery’s own voltage had a chance to do so. Instead, my charge algorithm raises the requested USB PD voltage above the battery’s own voltage until the measured current is within a certain deadband around the desired level.
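The deadband idea can be sketched in a few lines. This is a Python illustration rather than the actual DingoCharge Lua code; the function name is hypothetical, and the battery model in the usage loop (a fixed 7.6 V source behind 0.4 ohm) is an assumption made only so the example has something to regulate.

```python
PPS_STEP_MV = 20  # PPS voltage requests move in 20 mV increments


def next_request_mv(request_mv: int, measured_ma: float,
                    target_ma: float, deadband_ma: float) -> int:
    """Return the next PPS voltage request for software constant-current regulation."""
    if measured_ma < target_ma - deadband_ma:
        return request_mv + PPS_STEP_MV  # too little current: raise the voltage one step
    if measured_ma > target_ma + deadband_ma:
        return request_mv - PPS_STEP_MV  # too much current: lower the voltage one step
    return request_mv                    # inside the deadband: leave the request alone


# Toy usage (assumed model): battery at 7600 mV behind 0.4 ohm of total resistance.
request_mv = 7600
for _ in range(20):
    measured_ma = (request_mv - 7600) / 0.4  # mV / ohm = mA
    request_mv = next_request_mv(request_mv, measured_ma, target_ma=600, deadband_ma=25)
print(request_mv)
```

Because the loop only reacts to measured current at the tester, it does not matter how accurately a given adapter enforces its own current limit.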

Once the target constant-voltage level is reached, the charge algorithm switches to constant-voltage regulation, maintaining a preset voltage until the current being drawn by the battery falls below the termination threshold.

If a battery was previously overdischarged, it requires a slower “precharge” current to bring the battery’s voltage up to a level that is safe for its regular charge current.
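Taken together, the precharge, constant-current, and constant-voltage stages form a small state machine. The Python sketch below is one way to express the transitions; the phase names, function name, and the 6000 mV precharge threshold in the usage lines are assumptions for illustration, not values taken from DingoCharge.

```python
from enum import Enum


class Phase(Enum):
    PRECHARGE = "precharge"
    CONSTANT_CURRENT = "constant current"
    CONSTANT_VOLTAGE = "constant voltage"
    DONE = "done"


def next_phase(phase: Phase, battery_mv: float, current_ma: float, *,
               precharge_mv: float, target_mv: float, termination_ma: float) -> Phase:
    """Advance the charge phase based on the latest voltage and current readings."""
    if phase is Phase.PRECHARGE and battery_mv >= precharge_mv:
        return Phase.CONSTANT_CURRENT   # battery recovered enough for full current
    if phase is Phase.CONSTANT_CURRENT and battery_mv >= target_mv:
        return Phase.CONSTANT_VOLTAGE   # voltage target reached: hold it
    if phase is Phase.CONSTANT_VOLTAGE and current_ma <= termination_ma:
        return Phase.DONE               # current has tapered off: charge complete
    return phase                        # otherwise stay in the current phase


# Usage with assumed thresholds for a two-cell pack.
limits = dict(precharge_mv=6000, target_mv=8400, termination_ma=30)
phase = next_phase(Phase.PRECHARGE, 6100, 60, **limits)
print(phase.value)
```

Keeping the phase as explicit state (rather than re-deriving it from each reading) avoids flipping back to constant-current if the voltage dips slightly during the constant-voltage stage.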

Charge Safety Tests

Over time, I added more safety checks to ensure that the battery stays within a safe margin for voltage, current, temperature, and time. If a safety violation is detected during charging, the algorithm automatically sets its charge current to zero, effectively terminating the charge process. Additionally, if the charge process has finished but a small current flowing into the battery pushes the battery’s voltage too high, the PD request voltage is adjusted downwards to prevent an overvoltage condition.
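A minimal Python sketch of this kind of check is shown below. The structure, field names, and limit values are hypothetical; the only behaviour taken from the description above is that any violation forces the charge current target to zero.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SafetyLimits:
    max_mv: float       # highest allowed battery voltage
    max_ma: float       # highest allowed charge current
    max_temp_c: float   # highest allowed battery temperature
    max_seconds: float  # longest allowed charge time


def safety_violations(mv: float, ma: float, temp_c: float,
                      elapsed_s: float, limits: SafetyLimits) -> list[str]:
    """Return the names of every limit that the current readings exceed."""
    violations = []
    if mv > limits.max_mv:
        violations.append("overvoltage")
    if ma > limits.max_ma:
        violations.append("overcurrent")
    if temp_c > limits.max_temp_c:
        violations.append("overtemperature")
    if elapsed_s > limits.max_seconds:
        violations.append("timeout")
    return violations


def safe_target_ma(target_ma: float, violations: list[str]) -> float:
    """Drop the charge current target to zero as soon as anything is out of range."""
    return 0.0 if violations else target_ma


# Usage with assumed limits for a two-cell pack.
limits = SafetyLimits(max_mv=8500, max_ma=700, max_temp_c=45, max_seconds=3 * 3600)
problems = safety_violations(mv=8410, ma=590, temp_c=48, elapsed_s=1200, limits=limits)
print(problems, safe_target_ma(600, problems))
```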

Downstream Resistance Compensation

I also added the ability to compensate for high-resistance connections to the battery. This works by using Ohm’s Law (voltage = current * resistance) to boost the voltage thresholds in proportion to the amount of current flowing into the battery, based on a configured downstream (tester-to-battery) resistance. This is usually considered an advanced feature for a charging system, but since all of the charging is handled in software, compensating for lossy cabling and connectors is relatively trivial.
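As a worked example of that Ohm’s Law correction, the Python sketch below raises a voltage threshold by the drop expected across the downstream wiring. The function name and the 0.1 ohm resistance are assumptions for illustration.

```python
def compensated_threshold_mv(threshold_mv: float, current_ma: float,
                             downstream_ohms: float) -> float:
    """Raise a voltage threshold by the drop across the tester-to-battery wiring."""
    drop_mv = current_ma * downstream_ohms  # mA * ohm = mV
    return threshold_mv + drop_mv


# Assumed example: 8.4 V target, 0.1 ohm between tester and battery.
for current_ma in (600, 300, 30):
    limit = compensated_threshold_mv(8400, current_ma, 0.1)
    print(f"{current_ma:3d} mA -> regulate to {limit:.0f} mV at the tester")
```

The correction shrinks as the current tapers, so the voltage seen at the tester converges on the true battery voltage by the end of the charge.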

Charging Test

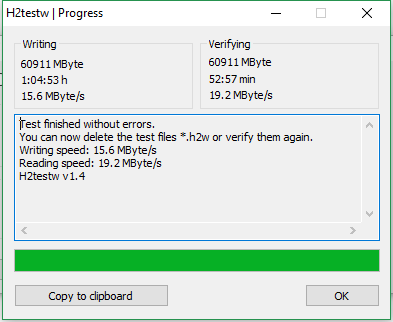

As a real-world demonstration of the DingoCharge software, I created a test setup that charged a 600mAh/7.4V nominal battery pack (a rechargeable 9V alternative that’s built from disposable vape batteries – stay tuned for that blog post!) at a 1C (600mA) rate to 8.4V (4.2V per cell) until the current tapered off to C/20 (30mA). Note that the pack I was charging was built with high-drain cells that can handle higher currents than normal; most commercial cells are best charged at a C/5 to C/2 rate. I used a Samsung EP-TA800 USB-C adapter, capable of a maximum of 25W at full power (11V at 2.25A).

Demonstration of DingoCharge charging a 9V Li-ion replacement, using a Samsung 25W USB-C PD adapter.

Block diagram describing the DingoCharge hardware setup. All power conversion (and the associated conversion losses) occurs only in the power source, with the tester simply issuing commands to control the power going into the battery.

Voltage/current plot of DingoCharge charging a battery at 600mA and 8.4 volts, using a Samsung EP-TA800 USB-C PD adapter.

The classic CC-CV charge profile is visible when the charging current and voltage are graphed over time. The stepped nature of the current is a consequence of the PPS voltage being limited to 20mV granularity, which causes a jump in current draw at each step. The requested voltage is increased or decreased whenever the current or voltage being sent to the battery falls outside a certain range (in this case, 600mA +/- 25mA during constant-current charging, and 8.4V +/- 10mV during constant-voltage charging).
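The size of each current jump follows from Ohm’s Law: one 20mV step divided by the total series resistance between the adapter and the battery’s internal voltage. The Python sketch below shows the arithmetic; the resistance values are assumptions for illustration and were not measured on the setup above.

```python
PPS_STEP_MV = 20  # PPS voltage granularity


def current_step_ma(step_mv: float, loop_ohms: float) -> float:
    """Approximate change in charge current caused by one voltage step."""
    return step_mv / loop_ohms  # mV / ohm = mA


# Assumed total resistances (cable + connectors + battery internal resistance).
for loop_ohms in (0.2, 0.4, 0.8):
    jump = current_step_ma(PPS_STEP_MV, loop_ohms)
    print(f"{loop_ohms:.1f} ohm loop: about {jump:.0f} mA per 20 mV step")
```

A lower-resistance path gives larger jumps per step, which is one reason the deadband needs to be wide enough to contain at least one step.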

One contribution to the relative flatness of the constant-voltage phase is that the charging algorithm is essentially performing four-wire (also known as Kelvin) sensing from the adapter to the tester, inherently compensating for voltage drop across the USB-C cable. This is also why the current flutters a bit as it tapers off: the voltage at the battery begins to rise above the deadband, resulting in a small amount of oscillation as the algorithm tries to maintain 8.4 +/- 0.01 volts.

Limitations

Nothing in this world is perfect, and neither is my charger implementation. In fact, trying to shoehorn a battery charger into what’s effectively a multimeter and a “wall wart” required a lot of compromises to be made.

No Power Switching

Charge termination is a bit tricky, as most implementations will just electrically disconnect the battery. However, with the tester, there is no power switching available that can be controlled through software. This is mitigated by setting the constant-current algorithm to 0 amps +/- 10 milliamps, resulting in minimal charge/discharge current as the battery voltage drops while it relaxes from a fully-charged state.

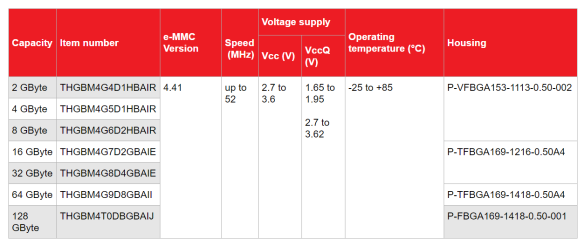

One Name, Many Variations

Another limitation is that the PPS protocol does not require adapters to support the full voltage range of 3.3 to 21 volts. Instead, an adapter may support maximums of 5.9, 11, 16, and/or 21 volts, at current levels up to 5 amps. The large disparity in supported voltages and currents means that the DingoCharge script needs to check the programmed charge parameters against what the adapter supports; many PPS adapters only support up to 11 volts, so 3S (3 cells in series) and higher cell configurations will not work (and an error message will be displayed if that is the case).
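A compatibility check along these lines might look like the Python sketch below. The `PpsRange` type, the function name, and the error wording are hypothetical; the example adapter range uses the 11V / 2.25A figures quoted for the Samsung adapter earlier.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PpsRange:
    min_mv: int  # lowest voltage the adapter can be asked for
    max_mv: int  # highest voltage the adapter can be asked for
    max_ma: int  # highest current the adapter can supply


def check_charge_params(pps: PpsRange, charge_mv: int, charge_ma: int) -> list[str]:
    """Return a list of reasons the adapter cannot satisfy the charge settings."""
    errors = []
    if charge_mv > pps.max_mv:
        errors.append(f"charge voltage {charge_mv} mV exceeds adapter maximum {pps.max_mv} mV")
    if charge_mv < pps.min_mv:
        errors.append(f"charge voltage {charge_mv} mV is below adapter minimum {pps.min_mv} mV")
    if charge_ma > pps.max_ma:
        errors.append(f"charge current {charge_ma} mA exceeds adapter maximum {pps.max_ma} mA")
    return errors


# An 11 V PPS adapter can charge a 2S pack (8.4 V) but not a 3S pack (12.6 V).
adapter = PpsRange(min_mv=3300, max_mv=11000, max_ma=2250)
print(check_charge_params(adapter, charge_mv=8400, charge_ma=600))
print(check_charge_params(adapter, charge_mv=12600, charge_ma=600))
```

In practice the requested voltage also has to sit somewhat above the battery voltage to push current through the cable, so a real check would likely need some headroom on top of the charge voltage.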

My (Electro)chemical Romance Recharge

I designed DingoCharge only for use with lithium-ion type battery chemistries, but have been experimenting with charging other rechargeable batteries, such as lead-acid and nickel-metal hydride/Ni-MH. There have been promising results with both chemistries, but neither is fully supported. I have not implemented proper 3-stage charging for lead-acid batteries, and Ni-MH charging only works by using a low current (C/10 typical) for a fixed period of time via DingoCharge’s time limit feature, or by using an external temperature sensor alongside the overtemperature protection feature to stop the charge process (a very crude delta-T/change-in-temperature algorithm). Thankfully, the software-based approach of DingoCharge means I can add this functionality in an official capacity with further research and development work… as long as I get around to it 😛 .

Lua Lunacy?

Additionally, this was my first real foray into Lua programming, so I’m pretty certain I’ve made some poor stylistic and other programming choices along the way. For all I know, it could have inherited some significant syntactic defects from my other programming (language) attempts that make it an “awful” program – but hey, it’s an open-source project so if you have some pointers, feel free to help contribute on GitHub (link is in the Downloads section below)!

Conclusion

The USB PD protocol, and the adjustable PPS functionality that was introduced in the PD 3.0 specification, offers a lot of potential for directly charging batteries since it skips the usual conversion step in a device. However, this type of charging technology remains largely untapped, with only a few smartphones (as of the time of writing) supporting it.

The scriptability of some USB-C testers like the AVHzY CT-3 or Power-Z KT002 allows for a script to handle all of the intricacies of battery charging, while providing an easy-to-use yet highly flexible interface. Thanks to the DingoCharge script, any USB PD PPS adapter and supported USB tester can be used as a universal battery charger.

Downloads

The DingoCharge script can be found on my GitHub profile (https://github.com/ginbot86/DingoCharge-Shizuku), and is open-source under the MIT License. If you have a suitable USB PD PPS adapter and USB-C tester, I’d love to hear if/how well DingoCharge works for your batteries!